Once you have fetched tweets using the “tweepy” library, the next step is to visualize the information as a wordcloud.

However, Twitter text contains a lot of unwanted content (URLs, usernames, etc.), so some extra pre-processing is required to clean the text and get it into a suitable format.

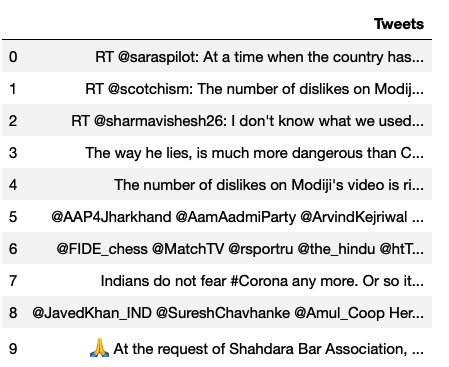

Creating Sample Tweets

```python
import pandas as pd

# A handful of sample tweets, standing in for text fetched with tweepy
Sample_Tweets = pd.DataFrame(
    columns=['Tweets'],
    data=[
        'RT @saraspilot: At a time when the country has crossed 3.5 million corona virus cases, even the Health minister after months has realised n…',
        "RT @scotchism: The number of dislikes on Modiji's video is rising like Corona virus cases now. \n\nIT CELL SHOULD WORK HARD TO FLATTEN THE CU…",
        "RT @sharmavishesh26: I don't know what we used to talk about before Corona virus.",
        'The way he lies, is much more dangerous than Corona Virus #मोदीजी_के_झूठ https://t.co/pIT5yaDJwt',
        "The number of dislikes on Modiji's video is rising like Corona virus cases now. \n\nIT CELL SHOULD WORK HARD TO FLATTEN THE CURVE.",
        '@AAP4Jharkhand @AamAadmiParty @ArvindKejriwal @AAPRajasthan @AAPTELANGANA @aartic02 @AAPChhattisgarh @AAPUttarPradesh @AAPUttarakhand Photo me chehra dikhane ke chakkar me shriman mask lagane ka sahi Tarika bhool gaye. With this attitude these people will be contributing in spreading of virus. How it will help in identifying Corona? Is it substitute for Corona test?',
        "@FIDE_chess @MatchTV @rsportru @the_hindu @htTweets @DainikBhaskar @sportexpress @airnewsalerts We'll win the battle of Corona Virus as well....in the same way...\n🇮🇳🤝🇷🇺",
        'Indians do not fear #Corona any more. Or so it seems. Slowly precautions are going out of the window. I see so many people heading to crowded places. Social distancing is reducing by the day. What gives people so much confidence against the virus?\n#coronainindia #coronavirus',
        '@JavedKhan_IND @SureshChavhanke @Amul_Coop Here corona virus spreads on Ram Navami\n\n* Here corona virus spreads on Krishna Janmashtami\n\n* Here corona virus spreads on Ganesh Chaturthi\n\nBut on Muharram procession, Corona virus will hide in fear! https://t.co/9viQcskudv',
        '🙏 At the request of Shahdara Bar Association, 600 lawyers and staff distributed homeopathy medicines to increase the immunity approved by Ministry of AYUSH to prevent corona virus.\n\nDr.Dc Prajapati https://t.co/UlYAz7O8UY',
    ]
)

# Displaying the data frame (in a Jupyter notebook)
Sample_Tweets
```

Sample Output

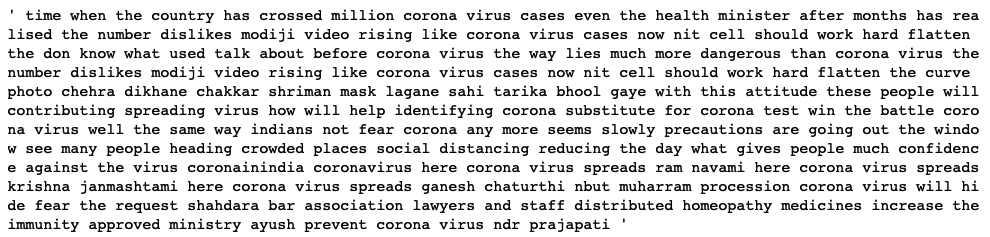

Pre-processing text data

```python
import re

# Extracting only the tweet text from the data frame
Tweet_Texts = Sample_Tweets['Tweets'].values

# Joining all tweets into a single string for the wordcloud.
# ' '.join() is used instead of str(), because str() on the array adds
# brackets, quotes and literal "\n" escapes, and abbreviates long arrays with "..."
Tweets_String = ' '.join(Tweet_Texts)

# Converting the whole text to lowercase
Tweet_Texts_Cleaned = Tweets_String.lower()

# Removing the Twitter usernames (@handle) from the tweet string
Tweet_Texts_Cleaned = re.sub(r'@\w+', ' ', Tweet_Texts_Cleaned)

# Removing the URLs from the tweet string
Tweet_Texts_Cleaned = re.sub(r'http\S+', ' ', Tweet_Texts_Cleaned)

# Deleting everything that is not a letter or a space
Tweet_Texts_Cleaned = re.sub(r'[^a-z A-Z]', ' ', Tweet_Texts_Cleaned)

# Deleting words shorter than 3 characters; most of these are stopwords
Tweet_Texts_Cleaned = re.sub(r'\b\w{1,2}\b', '', Tweet_Texts_Cleaned)

# Collapsing repeated spaces and trimming the ends
Tweet_Texts_Cleaned = re.sub(r' +', ' ', Tweet_Texts_Cleaned).strip()
Tweet_Texts_Cleaned
```

Sample Output
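The same cleaning steps can also be applied to each tweet separately, which is handy if you want to keep a cleaned column next to the original tweets. The sketch below wraps them in a function; `clean_tweet` is a hypothetical helper name, and the two input strings are taken from the sample tweets above.

```python
import re


def clean_tweet(text: str) -> str:
    """Apply the cleaning steps above to one tweet (hypothetical helper)."""
    text = text.lower()
    text = re.sub(r'@\w+', ' ', text)        # remove @usernames
    text = re.sub(r'http\S+', ' ', text)     # remove URLs
    text = re.sub(r'[^a-z ]', ' ', text)     # keep only letters and spaces
    text = re.sub(r'\b\w{1,2}\b', '', text)  # remove words of 1-2 letters
    text = re.sub(r' +', ' ', text)          # collapse repeated spaces
    return text.strip()


tweets = [
    "RT @sharmavishesh26: I don't know what we used to talk about before Corona virus.",
    "The way he lies, is much more dangerous than Corona Virus #मोदीजी_के_झूठ https://t.co/pIT5yaDJwt",
]
cleaned = [clean_tweet(t) for t in tweets]
print(cleaned)
```

With pandas, the helper can be applied to the whole column, for example `Sample_Tweets['Cleaned'] = Sample_Tweets['Tweets'].apply(clean_tweet)`, and the cleaned tweets joined with `' '.join(...)` before building the wordcloud.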

Creating Wordcloud

```python
# Plotting the wordcloud
# you can specify fonts, stopwords, background color and other options
import matplotlib.pyplot as plt
from wordcloud import WordCloud, STOPWORDS

# Creating the custom stopwords
customStopwords = list(STOPWORDS) + ['cases', 'corona', 'virus', 'people', 'will']

wordcloudimage = WordCloud(
    max_words=100,
    max_font_size=500,
    font_step=2,
    stopwords=customStopwords,
    background_color='white',
    width=1000,
    height=720
).generate(Tweet_Texts_Cleaned)

plt.figure(figsize=(15, 7))
plt.axis("off")
plt.imshow(wordcloudimage)
plt.show()
```

Sample Output

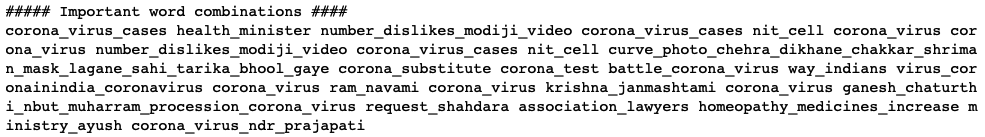
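WordCloud sizes each word according to how often it appears once stopwords are removed. If you want to check which words will dominate before plotting, you can count them yourself. The sketch below uses only the standard library; the sample string and the small stopword set are made up for illustration and stand in for `Tweet_Texts_Cleaned` and `customStopwords`.

```python
from collections import Counter

# Made-up stand-in for Tweet_Texts_Cleaned
cleaned_text = "corona virus cases rising corona virus dislikes video rising cases"

# Made-up stand-in for customStopwords
stopwords = {"corona", "virus", "cases"}

# Count how often each remaining word occurs
word_counts = Counter(w for w in cleaned_text.split() if w not in stopwords)
print(word_counts.most_common(3))
```

WordCloud's own tokenizer also merges plural forms and, by default, adds two-word collocations, so its counts will not match a plain `split()` exactly. If you want full control over the sizes, a frequency dict like this can be passed to `WordCloud(...).generate_from_frequencies(word_counts)` instead of calling `.generate()`.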

Bigram/Trigram Wordcloud

Using the TextBlob library, we can find the important word combinations (noun phrases) in the text and generate a wordcloud for those combinations.
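Noun phrases are one way to find word combinations. A simpler, different technique is plain n-grams: every run of two (bigrams) or three (trigrams) consecutive words. The sketch below illustrates it on a made-up, already-cleaned string; the `ngrams` helper is hypothetical and not part of TextBlob or WordCloud. Each n-gram is joined with an underscore, the same trick applied to the noun phrases below, so that WordCloud keeps it as a single token.

```python
from collections import Counter
from typing import List


def ngrams(text: str, n: int) -> List[str]:
    """Return every run of n consecutive words, joined with underscores."""
    words = text.split()
    return ["_".join(words[i:i + n]) for i in range(len(words) - n + 1)]


# Made-up, already-cleaned sample text for illustration
sample = "social distancing reducing social distancing"

bigrams = ngrams(sample, 2)
trigrams = ngrams(sample, 3)
print(bigrams)
print(trigrams)

# The most frequent bigram is a good candidate for the wordcloud
print(Counter(bigrams).most_common(1))

# A space-separated string of n-grams can be passed to WordCloud(...).generate()
NgramString = " ".join(bigrams + trigrams)
```

Unlike noun phrase extraction, raw n-grams also produce combinations that cross phrase boundaries (such as `distancing_reducing` here), so they are noisier, but they need no extra corpora.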

```python
# Finding the important word combinations using TextBlob
# (noun phrase extraction needs TextBlob's corpora, which can be installed
#  with: python -m textblob.download_corpora)
from textblob import TextBlob

# Converting the cleaned text to a blob
SampleTextInBlobFormat = TextBlob(Tweet_Texts_Cleaned)

# Finding the noun phrases (important keyword combinations) in the text
# This can help to find out which entities are being talked about
NounPhrases = SampleTextInBlobFormat.noun_phrases

# Joining the words of each noun phrase with underscores so that
# the wordcloud treats each phrase as a single token
NewNounList = []
for words in NounPhrases:
    NewNounList.append(words.replace(" ", "_"))

# Converting the list into a string to plot the wordcloud
NewNounString = ' '.join(NewNounList)
print('##### Important word combinations ####')
print(NewNounString)
```

Sample Output

```python
# Plotting the wordcloud
%matplotlib inline
# (the line above is a Jupyter magic that shows plots inside the notebook;
#  drop it when running as a plain Python script)
import matplotlib.pyplot as plt
from wordcloud import WordCloud, STOPWORDS

# Creating a custom list of stopwords (edit these to suit your own data)
customStopwords = list(STOPWORDS) + ['less', 'Trump', 'American', 'politics', 'country']

wordcloudimage = WordCloud(
    max_words=50,
    font_step=2,
    max_font_size=500,
    stopwords=customStopwords,
    background_color='black',
    width=1000,
    height=720
).generate(NewNounString)

plt.figure(figsize=(20, 8))
plt.imshow(wordcloudimage)
plt.axis("off")
plt.show()
```

Sample Output

Author Details

Lead Data Scientist

Farukh is an innovator in solving industry problems using artificial intelligence. His expertise is backed by 10 years of industry experience. As a senior data scientist, he is responsible for designing AI/ML solutions that provide maximum gains for clients. As a thought leader, his focus is on solving the key business problems of the CPG industry. He has worked across different domains like Telecom, Insurance, and Logistics. He has worked with global tech leaders including Infosys, IBM, and Persistent Systems. His passion for teaching inspired him to create this website!